How to Efficiently Organize and Manage Large Files

Introduction

Whether you’re dealing with RAW photos, 4K video projects, massive datasets, or large design files, the tasks of organizing and managing large files can challenge even the most seasoned professionals. Overgrown directories, slow system performance, constant disk space alerts, and difficulty locating data can stall productivity and cause headaches. Yet, with the right planning, tools, and processes, you can maintain efficient workflows, preserve storage capacity, and keep your digital workspace uncluttered.

In this comprehensive guide, we’ll explore best practices for structuring folders, naming conventions, metadata management, archiving methods, collaborative strategies, and advanced tools to help you effectively wrangle huge files. Whether you’re a creative professional, data analyst, or a casual user storing personal media, these principles will ensure large files remain accessible, secure, and easy to track.

1. Why Large File Organization Matters

- Improved Productivity: Time spent searching for scattered files or waiting on a slow system cuts into creative work and daily tasks.
- Cost Savings: Avoiding unnecessary duplicates and keeping only essential data reduces spending on cloud subscriptions and extra hard drives.
- Collaboration: In large team settings, consistent organization ensures multiple users can find and share massive assets without confusion.
- Security & Compliance: Proper classification of data helps enforce access controls, preventing unauthorized use.
- Future-Proofing: Laying down robust organizational schemes now prevents bigger data chaos as your file library grows further.

Real-World Example: A video production company improved editing turnaround times by standardizing folder hierarchies and adopting specialized file management software, cutting time spent searching for footage by 40%.

2. Evaluating Your File Management Needs

Before developing a system for managing large files, clarify your unique requirements:

- File Types & Sizes: Are you dealing with 100 GB 4K video footage, multi-gigabyte datasets, or tens of thousands of 20 MB photographs?
- Frequency of Use: How often do you open/edit these files? Infrequent usage suggests archival or offline storage.
- Collaboration: Do you work solo or in a multi-user environment requiring real-time or asynchronous collaboration?
- Performance Constraints: Are you accessing these files locally, over a NAS, or via the cloud? How important is read/write speed?
- Growth Projections: Are your data volumes expanding rapidly? Will you require more capacity and advanced indexing soon?

Scenario: A data scientist capturing daily logs that accumulate tens of gigabytes requires a different strategy than a photographer storing 5 TB of wedding archives.

3. Folder Structure and Naming Conventions

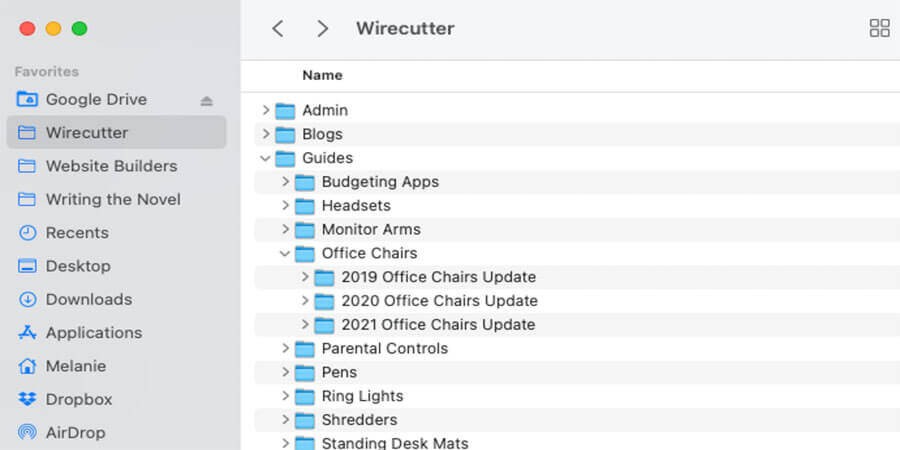

3.1 Hierarchical Organization

- Top-Level Categories: Group files by project, department, year, or major content type (e.g., "Projects," "Archives," "Clients").
- Nested Folders: Subdivide further by date, version, or sub-project. Keep depth manageable; avoid 10-level hierarchies if possible.
- Balance: If you store thousands of large files in a single folder, directory listing and searching may slow. Splitting them across subfolders can help.

3.2 Naming Conventions

- Include Key Info: e.g., `ProjectName_YYYYMMDD_Version.fileextension`.
- Avoid Special Characters: Some systems or cloud services may choke on them.
- Keep it Concise but Descriptive: Enough detail to identify the file’s content without overly long filenames.
- Versioning: For iterative work (like video or images), append `_v1`, `_v2`, or a date-based suffix to differentiate versions.
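
The naming rules above can be sketched in a few lines of Python. This is only an illustration under assumptions: the exact pattern (`ProjectName_YYYYMMDD_vN.ext`), the choice to replace disallowed characters with hyphens, and the function names `build_name` and `follows_convention` are hypothetical, not part of any standard.

```python
import re
from datetime import date

# Hypothetical pattern for names like ProjectName_YYYYMMDD_vN.ext
NAME_PATTERN = re.compile(r"^[A-Za-z0-9-]+_\d{8}_v\d+\.[A-Za-z0-9]+$")


def build_name(project: str, day: date, version: int, extension: str) -> str:
    """Build a filename that follows the assumed convention."""
    # Collapse anything other than letters, digits, and hyphens into a hyphen,
    # so no spaces or special characters end up in the name.
    safe_project = re.sub(r"[^A-Za-z0-9-]+", "-", project).strip("-")
    return f"{safe_project}_{day:%Y%m%d}_v{version}.{extension.lstrip('.')}"


def follows_convention(filename: str) -> bool:
    """Return True if the filename matches the assumed convention."""
    return NAME_PATTERN.match(filename) is not None
```

For instance, `build_name("Smith Wedding", date(2019, 6, 14), 2, ".mov")` returns `Smith-Wedding_20190614_v2.mov`, and `follows_convention` can be used to flag files that drift from the agreed scheme.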

3.3 Consistency

- Document your folder and naming conventions in a short guideline or "readme." Ensure all team members adopt it.
- If working alone, define a personal style that you’ll remember and stick to it.

Pro Tip: For large-scale or departmental systems, create a reference chart explaining the folder structure and naming rules so newcomers can adapt quickly.

4. Metadata and Tagging Strategies

Large collections benefit significantly from robust metadata:

- Embedded Metadata: For images, videos, or audio, embed metadata (EXIF/IPTC/XMP) describing content, location, tags, etc.
- Tagging Systems: Operating systems (Windows, macOS) or third-party tools let you assign color labels or keywords to files.
- Databases and Catalog Software: Tools like Adobe Lightroom (for photos), Bridge, or specialized asset management solutions can store extended metadata and facilitate complex searches.

Benefits:

- Quick filtering by project, date, camera type, or content without rummaging through folders.
- Minimal renaming required if robust tags are present.

Example: A photography studio uses Lightroom catalogs, assigning keywords for subject, location, and client. Searching “2019 wedding client Smith” instantly yields relevant RAW files among tens of thousands.

5. Storage Media Choices for Large Files

5.1 Direct-Attached Storage (DAS)

- External HDDs/SSDs: USB or Thunderbolt. Great for single-user, local usage. Upfront cost, no subscription.
- Pros: High speeds (especially SSD), full control, offline availability.
- Cons: Risk of failure or theft, must manage your own backups, limited collaboration.

5.2 Network-Attached Storage (NAS)

- LAN-Based: Devices like Synology or QNAP. Multi-drive RAID can offer redundancy.
- Pros: Central repository for large teams or multi-device use, decent local network speeds.
- Cons: Setup complexity, cost for hardware, potential network bottlenecks.

5.3 Cloud Storage / Cloud NAS

- Providers: Google Drive, OneDrive, Dropbox, Amazon S3. Also specialized media servers.
- Pros: Offsite redundancy, easy remote collaboration, scalable storage.
- Cons: Monthly fees, reliant on stable internet, potential performance lags.

5.4 Hybrid Approaches

- Local + Cloud: Keep actively used large files on local DAS or NAS for speed, and auto-sync or replicate older or larger archives to the cloud.

Advice: For extremely large file sets (multi-terabytes), local solutions provide raw speed. Cloud is ideal for collaboration, remote access, and offsite backup.

6. Overcoming File Transfer Bottlenecks

Large file transfers can be time-consuming. Minimizing friction is crucial:

- Use Fast Connections
  - For local file moves, USB 3.2 Gen 2 or Thunderbolt can reach roughly 1,000 MB/s or more. On a network, use gigabit Ethernet or 10GbE if possible.
- Multi-Threaded and Resumable Tools
  - Command-line utilities like `rsync` (Linux/macOS) and Robocopy (built into Windows), or third-party software such as TeraCopy, can speed up big transfers and handle interruptions gracefully. Robocopy can copy with multiple threads, and `rsync` can resume interrupted transfers.
- Compression or Splitting
  - Tools like 7-Zip can compress giant files or split them into parts, which can simplify uploads or backups.
  - Watch out for CPU overhead.
- Scheduled Transfers
  - Run large syncs or backups overnight to free bandwidth during work hours.

Scenario: A post-production team uploads 50 GB of daily footage to the cloud. They combine incremental compression + TeraCopy’s error-checking, scheduled to run at midnight.
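
The error-checking idea in this scenario can be approximated with a checksum-verified copy. The sketch below is not how TeraCopy works internally; it is a minimal Python illustration that assumes SHA-256 as the checksum and uses hypothetical names (`copy_verified`, `sha256_of`, `CHUNK_SIZE`).

```python
import hashlib
import shutil
from pathlib import Path

# Read 8 MiB at a time so huge files are never loaded fully into memory.
CHUNK_SIZE = 8 * 1024 * 1024


def sha256_of(path: Path) -> str:
    """Hash a file in fixed-size chunks and return the hex digest."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        while chunk := handle.read(CHUNK_SIZE):
            digest.update(chunk)
    return digest.hexdigest()


def copy_verified(source: Path, destination: Path) -> str:
    """Copy a file, then confirm the copy matches the source byte for byte."""
    destination.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(source, destination)  # copy2 also preserves timestamps
    source_hash = sha256_of(source)
    if sha256_of(destination) != source_hash:
        destination.unlink()  # remove the corrupt copy so it is not trusted later
        raise OSError(f"Checksum mismatch after copying {source}")
    return source_hash
```

Hashing both files doubles the read workload, so this trades speed for confidence; for routine transfers a dedicated tool is likely the better choice.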

7. Archiving Old Files

7.1 Why Archive?

- Rarely Accessed: Moving these files out frees up prime storage for current projects.
- Protect Historical Data: Maintain a record of completed works.
- Legal/Compliance: Some industries require multi-year retention.

7.2 Archiving Methods

- Offline Drives: Label external HDDs with date/year, store in stable conditions.
- Tape Storage: LTO tapes remain cost-effective for long-term large data archiving in enterprise contexts.
- Cold Cloud Storage: Amazon S3 Glacier, Azure Archive, or Google Cloud Storage Coldline for deep archives with lower monthly costs but slower retrieval.

7.3 Archival Organization

- Keep a separate folder or drive named “Archive_[YearRange].”
- Maintain a concise index or spreadsheet describing archived sets for quick retrieval.
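
One way to produce such an index is to generate it from the archive itself. The following is a rough sketch, assuming a CSV file is an acceptable index format; the column names and the function name `write_archive_index` are illustrative choices.

```python
import csv
from datetime import datetime
from pathlib import Path


def write_archive_index(archive_root: Path, index_path: Path) -> int:
    """Write one CSV row per archived file and return the number of files listed."""
    rows = 0
    with index_path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["relative_path", "size_bytes", "modified"])
        for path in sorted(archive_root.rglob("*")):
            # Skip folders, and skip the index itself if it lives inside the archive.
            if not path.is_file() or path.resolve() == index_path.resolve():
                continue
            info = path.stat()
            modified = datetime.fromtimestamp(info.st_mtime).isoformat(timespec="seconds")
            writer.writerow([path.relative_to(archive_root).as_posix(), info.st_size, modified])
            rows += 1
    return rows
```

Keeping a copy of the index somewhere other than the archive drive means you can search it without connecting the drive.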

Pro Tip: For safety, store at least one archive copy offsite. Archival drives kept in the same building can be lost in a single disaster.

8. Version Control for Large Files

While version control systems (e.g., Git) are typically used for code, they can handle large binary files with certain add-ons:

- Git LFS (Large File Storage)
  - Stores large files outside the normal Git repo, preventing monstrous clone sizes.
  - Great for game development or design teams with large assets.
- SVN / Perforce
  - Traditional enterprise solutions with partial checkouts, beneficial for huge assets.
- Cloud Collaboration
  - Services like Dropbox and OneDrive keep older versions of files, enabling reverts.

Advice: Evaluate if the overhead of a code-based version system is worth it for your media or data. For simpler needs, a standard incremental backup + well-labeled folder approach might suffice.

9. Collaborative Editing of Massive Projects

- Shared NAS
  - Multiple users can access the same large project at local network speeds. Ideal for offices with RAID-protected servers.
- Cloud Collaboration
  - Tools like Frame.io or Adobe’s Creative Cloud libraries support video and design work.
  - Real-time sync might be slow for huge files on an average internet connection.
- Partial Sync or Proxy Media
  - Video editing workflows often involve generating lower-resolution proxy files for remote editors, while high-res masters remain on local storage.

Scenario: A film production uses a 10GbE NAS for local editing speed, plus a cloud proxy workflow for remote colorists who can’t handle full raw 8K footage.

10. Automating File Organization

10.1 Scripting & Tools

- Command-Line: `mv`, `cp`, and `rsync` on Linux/macOS, or `robocopy` on Windows, can be scripted for daily tasks such as sorting files by date or size.
- Hazel (macOS) or File Juggler (Windows): Watches folders and auto-renames or moves files based on rules (date, keywords, etc.).
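
As an illustration of the scripted approach, the sketch below moves files from a drop folder into year and day subfolders based on each file's modification time. The folder layout and the name `sort_by_date` are assumptions made for this example; a real workflow might prefer capture dates from embedded metadata instead.

```python
import shutil
from datetime import datetime
from pathlib import Path


def sort_by_date(inbox: Path, library: Path) -> list[Path]:
    """Move each file in inbox to library/YYYY/YYYY-MM-DD/ and return the new paths."""
    moved = []
    for item in sorted(inbox.iterdir()):
        if not item.is_file():
            continue  # leave subfolders untouched
        stamp = datetime.fromtimestamp(item.stat().st_mtime)
        target_dir = library / f"{stamp:%Y}" / f"{stamp:%Y-%m-%d}"
        target_dir.mkdir(parents=True, exist_ok=True)
        target = target_dir / item.name
        if target.exists():
            continue  # never overwrite an existing file; leave it for manual review
        shutil.move(str(item), str(target))
        moved.append(target)
    return moved
```

A script like this could be run from a scheduler (cron, Task Scheduler) so new files are filed without manual effort.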

10.2 Tagging & Sorting

- Tools like Adobe Bridge can auto-apply metadata tags and move files to relevant subfolders.
- Workflow automation services (Zapier, IFTTT) can watch cloud folders and trigger actions when files arrive.

10.3 Bulk Renamers

- Tools like Advanced Renamer, Bulk Rename Utility, or NameChanger expedite consistent naming across large sets.

Pro Tip: Automation ensures newly added or generated files don’t languish in a chaotic “misc” folder, saving future headaches.

11. File Search and Indexing Strategies

With thousands of large files across multiple drives, searching by name alone might be slow:

- OS Search Indexers
  - Windows Search or macOS Spotlight can index file names, content, and tags. Keep them properly configured.
  - Large media might need specialized indexing or rely on partial metadata.
- Third-Party Search Tools
  - Everything (Windows) indexes file names on NTFS volumes very quickly.
  - Alfred or LaunchBar (macOS) can provide faster, more configurable searching.
  - DocFetcher or Recoll for content-based indexing.
- Database Catalog Software
  - For large photo/video libraries, using specialized catalogs (Lightroom, Capture One, Bridge) is crucial.

Scenario: A design agency with 500K images uses Adobe Bridge for tagging and searching across multiple NAS volumes. Meanwhile, smaller teams or home users might be fine with OS-level search.

12. Dealing with Duplicate and Redundant Files

Duplicate files bloat storage and cause confusion:

- Duplicate File Finders
  - Tools like dupeGuru, Gemini 2 (macOS), or CloneSpy (Windows). They scan for identical or similar content.
- Consolidate & Delete
  - Carefully confirm duplicates before removal. Keep the best or most recent version.
- Use Single Master Copies
  - For large assets, store them in a central location and reference them instead of scattering multiple duplicates across projects.

Pro Tip: Evaluate apparent duplicates carefully. Some files that look alike are actually different edits of the same source. Mark them distinctly or keep them in versioned folders.
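
For exact duplicates, a common technique is to group files by size first and hash only the files that share a size, which avoids reading most large files at all. The sketch below illustrates that idea; it is not how any of the tools named above are implemented, and the names `find_duplicates` and `file_hash` are hypothetical. It only reports groups and deletes nothing.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_hash(path: Path, chunk_size: int = 8 * 1024 * 1024) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        while chunk := handle.read(chunk_size):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root: Path) -> list[list[Path]]:
    """Return groups of files under root whose contents are byte-identical."""
    by_size = defaultdict(list)
    for path in root.rglob("*"):
        if path.is_file():
            by_size[path.stat().st_size].append(path)

    groups = []
    for same_size in by_size.values():
        if len(same_size) < 2:
            continue  # a unique size cannot have an exact duplicate
        by_hash = defaultdict(list)
        for path in same_size:
            by_hash[file_hash(path)].append(path)
        groups.extend(sorted(paths) for paths in by_hash.values() if len(paths) > 1)
    return groups
```

Because it compares full contents, this finds only exact copies; near-duplicates such as re-exports or different edits need a similarity-based tool and a human decision.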

13. Archival Compression and Containers

13.1 Compressed Archives

- 7-Zip, WinRAR, or zip files can bundle multiple large files into one archive and compress them for storage.
- This saves space when files contain redundancies, at the cost of extra time to compress and extract.

13.2 Self-Extracting Archives

- Eases distribution. Recipients open a single .exe (or, on macOS, a .dmg disk image) to expand everything.
- Not always recommended for extremely large sets due to final file size.

13.3 Container Formats

- Disk image formats (.iso, .dmg) or encrypted containers (such as VeraCrypt, the maintained successor to the discontinued TrueCrypt) can hold large sets with optional encryption.

Scenario: A game developer archives older project builds as solid .7z archives, significantly reducing the size of repeated textures or code.
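
Python's standard library does not write .7z files, so the sketch below swaps in a `.tar.xz` archive to illustrate a similar idea: the whole folder is compressed as one stream, so content repeated across files can compress well. The function name `archive_folder` is hypothetical, and actual savings depend heavily on the data.

```python
import tarfile
from pathlib import Path


def archive_folder(folder: Path, archive_path: Path) -> Path:
    """Pack a folder into a single xz-compressed tar archive."""
    # "w:xz" compresses the whole tar stream with LZMA, not each file separately.
    with tarfile.open(archive_path, "w:xz") as archive:
        archive.add(folder, arcname=folder.name)
    return archive_path
```

Already-compressed media such as video or JPEG images will usually shrink very little, so this approach suits builds, logs, and uncompressed assets better.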

14. Maintaining Performance with Large Files

- Defragment or Optimize Disks
  - For HDDs, defragmentation can speed up file reads if large files are scattered.
  - SSDs rely on TRIM operations and should not be defragmented.
- Sufficient RAM
  - Opening or editing huge images or 4K video can demand a lot of memory. Enough RAM prevents overreliance on slow virtual memory.
- Use Proxies
  - In video or 3D workflows, proxy media or smaller preview files accelerate editing while maintaining references to the full-res versions.
- Upgrade to Fast Interfaces
  - USB 3.2 Gen 2 or Thunderbolt 3/4 can drastically reduce large file transfer times.

Advice: If performance lags, identify if the bottleneck is CPU, RAM, or disk speed. Upgrading or re-optimizing the weakest link yields big gains.

15. Collaboration with Remote Teams

15.1 Cloud Solutions

- Dropbox, Google Drive, OneDrive can handle large file sync, but watch out for size or bandwidth limits.
- For huge data, specialized platforms (e.g., Signiant Media Shuttle) or hosting on AWS S3 might be better.

15.2 NAS with Remote Access

- Set up a VPN or use a secure remote file access tool. Performance depends on network speeds.

15.3 File Locking and Version Control

- Use tools that lock a file while one user edits it (preventing conflicting edits) or that keep version history when several people edit the same file.

Pro Tip: For large media, consider solutions that offer partial check-outs or proxy editing. Full downloads for each minor revision can be painfully slow.

16. Data Security for Large Files

- Encryption
  - At rest: On external drives or local volumes, tools like BitLocker, FileVault, or VeraCrypt.
  - In transit: SFTP/FTPS or VPN for remote file moves.
- Access Controls
  - Role-based access for shared repositories, ensuring not everyone sees massive confidential assets.
- Backup
  - Don’t forget multi-terabyte backups. If your entire drive is huge, you may need multiple backup drives or services.

Scenario: A 3D animation studio ensures their 8 TB project files are on a secured NAS (RAID 5 + encryption), with cloud replication to a zero-knowledge service. Only the IT admin and team lead hold encryption keys.

17. Maintaining a Clean and Future-Proof System

17.1 Regular Audits

- Schedule quarterly checks of large-file directories for duplicates, obsolete versions, or completed projects that can be archived.
- Freeing space improves performance and reduces clutter.
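
Part of such an audit can be scripted. The sketch below lists files that are both large and untouched for a long time, which makes them candidates for archiving. The thresholds, the use of modification time as the "idle" signal, and the name `find_archive_candidates` are assumptions for illustration.

```python
import time
from pathlib import Path


def find_archive_candidates(root: Path, min_bytes: int, max_idle_days: int) -> list[tuple[Path, int]]:
    """Return (path, size) pairs for large files not modified recently, largest first."""
    cutoff = time.time() - max_idle_days * 86400  # 86,400 seconds per day
    candidates = []
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        info = path.stat()
        if info.st_size >= min_bytes and info.st_mtime < cutoff:
            candidates.append((path, info.st_size))
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)
```

For example, `find_archive_candidates(Path("Projects"), 1_000_000_000, 365)` would list files of at least 1 GB that have not been modified in a year. The result is a review list only; deciding what to archive or delete still needs a person.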

17.2 Migrate to Upgraded Storage

- As data grows, be ready to expand from a single external HDD to a multi-bay NAS or higher cloud plan.
- Evaluate new or advanced file systems (like ZFS, APFS) for better large file handling.

17.3 Document Your Strategy

- In a team environment, write a brief Standard Operating Procedure (SOP) on how to name, store, and archive large files.

Pro Tip: Resist hoarding all versions forever. A careful culling process can drastically reduce storage overhead while preserving essential milestones.

18. Putting It All Together

Example Workflow:

- Plan: A video editor with 2 TB of daily footage invests in a local 10 TB RAID NAS for immediate project access, plus monthly archiving to an 8 TB external drive.
- Structure: The top-level folder is “Projects,” subdivided by year and project name. Each project folder has “Footage,” “Audio,” “Exports,” “Proxy,” “Archive.”
- Automation: Scripts run daily to rename and move new footage from a “CameraDump” folder into the correct subfolder, appending date/time.
- Versioning: Exports are named “ProjectName_v1,” “ProjectName_v2.” The user keeps only final or near-final versions beyond v3.
- Collaborate: Upload compressed proxy media to a shared cloud folder for the remote colorist. Full-res footage remains local.
- Backup: Weekly, a snapshot of the NAS is backed up to the external drive. An offsite copy on a second drive stored at home is updated monthly.

Outcome: The editor finds it easy to locate final cuts or raw materials, works quickly with local speeds, and knows if the NAS fails, backups exist for restoration.

Conclusion

Managing large files efficiently doesn’t have to be an overwhelming chore. By constructing a well-organized folder hierarchy, employing meaningful naming conventions, and leveraging metadata, you lay a solid foundation. Next, choosing suitable storage media—whether direct-attached drives, NAS solutions, cloud platforms, or a hybrid approach—gives you the performance, redundancy, and accessibility you need.

Automation (through scripts or specialized tools) helps maintain consistent file organization as new data pours in, while robust archiving ensures rarely accessed but still valuable files don’t hog prime storage. Tagging, indexing, and powerful search capabilities speed up your workflow, letting you quickly find or share large assets. Meanwhile, protecting these files with backups and encryption keeps your valuable data secure.

Ultimately, an effective large file management strategy evolves with your needs. As volumes grow or collaboration demands shift, be prepared to scale up storage solutions or refine your processes. By applying the principles outlined in this guide—planning your folder structures, employing metadata and version control, archiving methodically, and combining the right hardware or cloud resources—you’ll keep your system streamlined. No matter how massive your files get, you’ll have the clarity and tools to manage them confidently, without drowning in digital clutter.
